Our worldview is not determined by circumstances. We ourselves choose our attitude toward everything that happens around us. What is the difference between someone who remains optimistic despite many adversities and someone who is angry at the whole world because they pinched a finger? It all comes down to different thinking patterns.

In psychology, the term "cognitive distortion" describes an illogical, biased conclusion or belief that distorts one's perception of reality, usually in a negative way. The phenomenon is quite common, but unless you know how it shows up, it is not easy to recognize. In most cases, it stems from automatic thoughts. These feel so natural that a person does not even realize they can be changed. It is no wonder that many people mistake a passing judgment for an immutable truth.

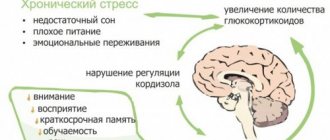

Cognitive distortions can seriously harm mental health and often lead to stress, depression, and anxiety. If they are not addressed promptly, automatic thoughts become entrenched as patterns and can negatively affect behavior and the logic of decision-making.

Anyone who wants to maintain a healthy psyche would do well to understand how cognitive distortions work and how they affect our worldview.

Exaggerated sense of duty

Attitudes in the spirit of "must," "should," and "ought to" are almost always associated with cognitive distortions. For example: "I should have come to the meeting earlier," "I should lose weight to become more attractive." Such thoughts cause feelings of shame or guilt. We are no less categorical toward others, saying things like "he should have called yesterday" or "she is forever indebted to me for this help."

People with such beliefs often become upset, offended, and angry with those who do not live up to their expectations. But however much we might want to, we cannot control other people's behavior, and we certainly shouldn't assume that anyone "should" do something.

List of cognitive distortions

Photo: Erik Johansson

Cognitive biases are systematic errors in thinking, or patterns of biased judgment, that arise in certain situations. The existence of most of these biases has been demonstrated in psychological experiments.

Cognitive distortions are thought to be a product of evolved mental processes. Some of them serve an adaptive function, since they enable more effective action or faster decisions. Others appear to stem from a lack of appropriate thinking skills, or from misapplying skills that are adaptive in other circumstances.

Decision making and behavioral biases

- The bandwagon effect is the tendency to do (or believe) things because many other people do (or believe) them. It is related to groupthink, herd behavior, and mass manias.

- Base rate neglect is ignoring available statistical data in favor of individual cases.

- The bias blind spot is the tendency not to compensate for one's own cognitive biases.

- Choice-supportive bias is the tendency to remember one's choices as better than they actually were.

- Confirmation bias is the tendency to seek out or interpret information in a way that confirms one's preconceptions.

- Congruence bias is the tendency to test hypotheses exclusively through direct testing, rather than by testing possible alternative hypotheses.

- The contrast effect is the enhancement or diminishment of a perception when it is compared with a recently observed, contrasting object. For example, the death of one person may seem insignificant next to the deaths of millions of people in the camps.

- Professional deformation is the tendency to view things through the conventions of one's own profession, ignoring any broader point of view.

- Distinction bias is the tendency to perceive two options as more different when they are evaluated simultaneously than when they are evaluated separately.

- The endowment effect is the fact that people often demand much more to give up an item than they would be willing to pay to acquire it.

- Extremeness aversion is the tendency to avoid extreme options by choosing intermediate ones.

- The focusing effect is a prediction error that occurs when people pay too much attention to one aspect of a situation, which leads them to misjudge the utility of a future outcome. For example, focusing on who would be to blame for a possible nuclear war distracts attention from the fact that everyone would suffer in it.

- Narrow framing is using too narrow an approach to, or description of, a situation or problem.

- The framing effect is drawing different conclusions from the same data depending on how the data are presented.

- Hyperbolic discounting is the tendency to prefer a smaller, sooner payment over a larger, later one, and to do so more strongly the closer both payments are to the present.

- Illusion of control is the tendency of people to believe that they can control, or at least influence, the outcomes of events that they actually cannot influence.

- Impact bias is the tendency to overestimate how long, or how intensely, an event will affect one's future feelings.

- Information bias is the tendency to seek information even when it cannot affect action.

- Irrational escalation is the tendency to make irrational decisions on the basis of past rational decisions, or to justify actions already taken. It shows up, for example, at auctions, when an item is bought for more than it is worth.

- Loss aversion is the tendency for the negative utility of losing an object to exceed the utility of acquiring it.

- The mere exposure (familiarity) effect is the tendency of people to express undue liking for something simply because they are familiar with it.

- The moral credential effect: a person with a track record of being unprejudiced becomes more likely to act with prejudice later. In other words, if everyone (including the person themselves) considers someone beyond reproach, that person may come to believe that any action of theirs will be blameless too.

- The need for closure is the need to settle an important issue, get an answer, and avoid feelings of doubt and uncertainty. Situational factors (time pressure or social pressure) can amplify this source of error.

- The need for contradiction is the tendency of more sensational, provocative, or controversial messages to spread faster in the mass media. Al Gore has claimed that only a few percent of scientific publications reject global warming, while more than 50% of press articles aimed at the general public reject it.

- Neglect of probability is the tendency to disregard probability completely when making decisions under uncertainty.

- Omission bias is the tendency to judge harmful actions as worse and less moral than equally harmful omissions (failures to act).

- Outcome bias is the tendency to judge decisions by their final results rather than by the quality of the decision given the circumstances at the time it was made. ("Winners are not judged.")

- The planning fallacy is the tendency to underestimate how long it will take to complete tasks.

- Post-purchase rationalization is the tendency to persuade oneself with rational-sounding arguments that a purchase was worth the money.

- The pseudocertainty effect is the tendency to make risk-averse choices when the expected outcome is positive, but risk-seeking choices to avoid a negative outcome.

- Reactance is the urge to do the opposite of what someone encourages you to do, out of a need to resist perceived attempts to limit your freedom of choice.

- Selective perception is the tendency for expectations to influence perception.

- Status quo bias is the tendency of people to want things to remain approximately the same.

- Unit bias (preference for complete units) is the urge to finish a given unit of a task or item. It is clearly visible in eating: people tend to eat more when offered one large portion than when they take many small portions.

- The von Restorff effect, also called the isolation effect, is a feature of human memory: an item that stands out from a set of similar, homogeneous items is remembered better than the others.

- Zero-risk bias is a preference for reducing one small risk to zero rather than substantially reducing another, larger risk. For example, people would rather reduce the likelihood of terrorist attacks to zero than see a sharp reduction in road accidents, even if the latter would save more lives.
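The hyperbolic discounting entry in the list above is easier to grasp with numbers. The sketch below is only an illustration and rests on several assumptions. It uses one common textbook form of the hyperbolic model, V = A / (1 + kD), where A is the amount and D is the delay in days. The discount rate k = 0.1 per day, the amounts (100 vs. 120), and the exponential comparison factor of 0.95 per day are all made-up values, not data from any study, and the function names are hypothetical. The sketch shows a preference reversal. When the smaller payment is immediate, it wins. When both payments are pushed 100 days into the future, the larger one wins instead. An exponential discounter ranks the two options the same way no matter how far away they are.

```python
def hyperbolic_value(amount: float, delay_days: float, k: float = 0.1) -> float:
    """Present value under hyperbolic discounting: V = A / (1 + k * D).

    k = 0.1 per day is an illustrative assumption, not an empirical estimate.
    """
    return amount / (1 + k * delay_days)


def exponential_value(amount: float, delay_days: float, daily_factor: float = 0.95) -> float:
    """Present value under exponential discounting: V = A * d ** D (d is illustrative)."""
    return amount * daily_factor ** delay_days


def prefers_sooner(value_fn, small: float, t_small: float, large: float, t_large: float) -> bool:
    """True if the smaller, sooner payment is valued above the larger, later one."""
    return value_fn(small, t_small) > value_fn(large, t_large)


if __name__ == "__main__":
    # Push both payments the same number of days into the future and compare.
    for shift in (0, 100):
        hyp = prefers_sooner(hyperbolic_value, 100, shift, 120, shift + 10)
        exp = prefers_sooner(exponential_value, 100, shift, 120, shift + 10)
        print(f"shift={shift:3d} days: hyperbolic prefers sooner={hyp}, "
              f"exponential prefers sooner={exp}")
```

With these numbers, the hyperbolic model prefers the sooner 100 at a shift of 0 days (100 vs. 60) but the later 120 at a shift of 100 days (about 9.1 vs. 10). The exponential model prefers the sooner payment in both cases, because the ratio between the two values does not depend on the shift.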

Distortions related to probabilities and beliefs

Many of these cognitive biases are studied for how they affect business and experimental research.

- The ambiguity effect is the avoidance of options for which missing information makes the probability seem "unknown."

- The anchoring effect (or anchoring heuristic) is a feature of human numerical decision-making that causes irrational shifts in answers toward a number that entered the mind before the decision was made. Many store managers know the anchoring effect well: placing an expensive item (for example, a $10,000 handbag) next to a cheaper item that is still expensive for its category (for example, a $200 keychain) increases sales of the latter. In this example, the $10,000 is the anchor against which the keychain seems cheap.

- Attentional bias is the neglect of relevant information when judging a correlation or association.

- The availability heuristic is judging as more likely whatever comes to mind more easily, which biases estimates toward the more vivid, unusual, or emotionally charged.

- An availability cascade is a self-reinforcing process in which a collective belief becomes more and more convincing through increasing repetition in public discourse ("repeat something long enough and it becomes true").

- The clustering illusion is the tendency to see patterns where there really aren't any.

- Completeness bias is the tendency to believe that the closer the mean is to a given value, the narrower the distribution of a data set.

- The conjunction fallacy is the tendency to assume that specific conditions are more probable than general ones.

- The gambler's fallacy is the tendency to believe that independent random events are influenced by previous random events. For example, if a coin is tossed many times in a row, it may well land tails 10 times in a row. If the coin is fair, many people find it obvious that the next toss is more likely to land heads. This conclusion is wrong: the probability of heads or tails on the next toss is still 1/2.

- The Hawthorne effect is a phenomenon in which people observed in a study temporarily change their behavior or performance because they know they are being observed. Example: labor productivity at a factory rises when an inspection commission arrives.

- The hindsight effect, sometimes called the "I knew it all along" effect, is the tendency to see past events as having been predictable.

- The illusion of correlation is the erroneous belief in the relationship between certain actions and results.

- The ludic fallacy is analyzing problems of chance within the narrow frame of games.

- The observer-expectancy effect occurs when a researcher expects a certain result and unconsciously manipulates the experiment or misinterprets the data in order to find it (see also the subject-expectancy effect).

- Optimism bias is the tendency to systematically overestimate the chances that planned actions will succeed.

- The overconfidence effect is the tendency to overestimate one's own abilities.

- Positive outcome bias is the tendency to overestimate the likelihood of good things happening when making predictions.

- The primacy effect is the tendency to give more weight to initial events than to subsequent ones.

- The recency effect is the tendency to give more weight to recent events than to earlier ones.

- Disregard of regression toward the mean is the tendency to expect a system's extreme behavior to continue.

- The reminiscence bump is the effect whereby people recall more events from their youth than from other periods of their lives.

- Rosy retrospection is the tendency to rate past events more positively than one perceived them at the time they actually occurred.

- Selection bias is a bias in experimental data that is related to the way in which the data were collected.

- Stereotyping is expecting a member of a group to have certain characteristics without having any further information about that individual.

- The subadditivity effect is the tendency to judge the probability of a whole as less than the sum of the probabilities of its constituent parts.

- Subjective validation is perceiving something as true because one's beliefs require it to be true. It also includes perceiving coincidences as relationships.

- The telescoping effect is the tendency for recent events to seem more distant and distant events to seem closer in time.

- The Texas sharpshooter fallacy is choosing or adjusting a hypothesis after the data have been collected, which makes it impossible to test the hypothesis fairly.
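The gambler's fallacy entry in the list above says that after 10 tails in a row, a fair coin still lands heads with probability 1/2. A quick simulation can check this. The sketch below models a fair coin with Python's `random` module, treating each random bit as one toss (1 = heads, 0 = tails). The function name, the seed, and the number of trials are arbitrary, hypothetical choices for illustration. The simulation keeps only the runs that begin with 10 tails and counts how often the next toss comes up heads.

```python
import random


def next_toss_after_tails(streak: int = 10, trials: int = 2_000_000,
                          seed: int = 1) -> tuple[int, int]:
    """Simulate `trials` runs of (streak + 1) fair coin tosses.

    Each run is drawn as streak + 1 random bits: 1 = heads, 0 = tails.
    Runs whose first `streak` tosses are all tails are kept; for those runs,
    count how often the following toss is heads.
    Returns (runs_kept, heads_on_next_toss).
    """
    rng = random.Random(seed)           # seeded so the result is reproducible
    first_tosses = (1 << streak) - 1    # bit mask covering the first `streak` tosses
    kept = heads = 0
    for _ in range(trials):
        bits = rng.getrandbits(streak + 1)
        if (bits & first_tosses) == 0:  # the first `streak` tosses were all tails
            kept += 1
            heads += bits >> streak     # the top bit is the next toss (0 or 1)
    return kept, heads


if __name__ == "__main__":
    kept, heads = next_toss_after_tails()
    print(f"runs starting with 10 tails: {kept}")
    print(f"share of heads on the next toss: {heads / kept:.3f}")
```

About 1 run in 1,024 starts with 10 tails, so roughly 1,950 of the 2,000,000 runs are kept. Among those runs, the share of heads on the next toss comes out close to 0.5, not noticeably above it.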

Social distortions

Most of these biases are due to attribution errors.

- Actor-observer bias is the tendency, when explaining other people's behavior, to overemphasize the influence of their personal qualities and underestimate the influence of the situation (see also the fundamental attribution error). It is paired with the opposite tendency when people assess their own actions: they overestimate the influence of the situation and underestimate the influence of their own qualities.

- The Dunning-Kruger effect is a cognitive bias in which "low-skilled people draw erroneous conclusions and make poor decisions, but are unable to recognize their mistakes because of their low skill level." This leads them to overrate their own abilities. Truly highly skilled people, on the contrary, tend to underrate their abilities and lack self-confidence, assuming that others are more competent. As a result, less competent people generally rate their own abilities more highly than competent people do, and competent people also tend to assume that others rate their abilities as low as they do themselves.

- Egocentric bias occurs when people consider themselves more responsible for the outcome of a joint effort than an outside observer would.

- The Barnum effect (or Forer effect) is the tendency to rate as highly accurate descriptions of one's personality that seem tailored specifically to oneself but are in fact general enough to apply to a very large number of people. Horoscopes are an example.

- The false consensus effect is the tendency for people to overestimate the extent to which other people agree with them.

- The fundamental attribution error is the tendency to overemphasize personality-based explanations of other people's behavior while underestimating the role and strength of situational influences on the same behavior.

- The halo effect arises when one person perceives another: in the perceiver's eyes, a person's positive and negative traits "spill over" from one area of their personality to another.

- Herd instinct is a common tendency to accept the opinions and follow the behavior of the majority in order to feel safe and avoid conflict.

- The illusion of asymmetric insight: a person believes that their knowledge of the people close to them exceeds those people's knowledge of them.

- The illusion of transparency: people overestimate how well others can understand them, and they also overestimate how well they can understand others.

- In-group bias is the tendency of people to favor those they perceive as members of their own group.

- The “just world” phenomenon is the tendency of people to believe that the world is “fair” and therefore people get “what they deserve” according to their personal qualities and actions: good people are rewarded and bad people are punished.

- The Lake Wobegon effect is the human tendency to hold flattering beliefs about oneself and to consider oneself above average.

- Distortion due to the formulation of a law is a form of cultural bias: writing a law down as a mathematical formula creates the illusion that the law really exists.

- Out-group homogeneity bias is the tendency to perceive members of one's own group as more varied than members of other groups.

- Projection bias is the tendency to unconsciously believe that other people share the same thoughts, beliefs, values, and attitudes as the subject.

- Self-serving bias is the tendency to claim more responsibility for successes than for failures. It can also show up as a tendency to present ambiguous information in a way that favors oneself.

- Self-fulfilling prophecy is the tendency to engage in activities that will produce results that (consciously or not) confirm our beliefs.

- System justification is the tendency to defend and maintain the status quo: to prefer the existing social, political, and economic order and to resist change, even at the expense of individual and collective interests.

- Trait ascription bias is the tendency for people to see themselves as relatively variable in personality, behavior, and mood, while seeing others as much more predictable.

- The first impression effect is the influence that an opinion formed in the first minutes of meeting someone has on the later assessment of that person's activities and personality. Along with the halo effect and others, it is counted among the errors researchers commonly make when using the observation method.

Belief in a just world

Or fate, karma, whatever you want to call it. It is also a form of psychological defense. The idea is this: a person believes that the Universe runs according to certain rules, and if you follow them, everything will be fine. The criminal will get what he deserves, and a good, kind person will be fine. The boomerang principle and the belief that everyone will be repaid according to their deeds both come from here.

It is much easier to think this way than to admit that the world is unfair and full of randomness: many scoundrels live happily ever after, while good people suffer all their lives. So the brain builds psychological defenses; otherwise depression would not be far off.

This harmless faith in the best sometimes goes to extremes. You have probably heard of victim blaming: blaming a crime not on the perpetrator but on the victim. People claim, for instance, that a woman who was raped "provoked" an otherwise normal man into doing what he did.

Buyer's Stockholm syndrome

Imagine this situation: a store manager persuades you to buy a product five times more expensive than you had planned. For example, you ordered an expensive built-in kitchen instead of a simple set from IKEA. You leave the store thinking, "What a fool I am, I fell for the salesperson's pitch!" The thought is unpleasant, and the brain instantly switches on a psychological defense known as buyer's Stockholm syndrome.

You begin to convince yourself that the money was well spent. This is exactly the kitchen you need, the IKEA set is cheap junk, so you didn't overpay at all; that's how it should be. The arguments come easily: the psychological defense works perfectly.

Anchoring effect

The first decision is not always the best, but our mind clings to the initial information, which takes a firm hold on us.

The anchoring effect (or anchoring heuristic) is the tendency to rely too heavily on first impressions (the anchor information) when making decisions. It is especially clear when estimating numerical values: the estimate is biased toward the initial approximation. Simply put, we judge things relative to something rather than objectively.

Research suggests that the anchoring effect can explain everything from why you don't get the raise you want (if you ask for more at the start, the final number will be higher, and vice versa) to why you believe stereotypes about people you are meeting for the first time.

An illustrative study by psychologists Mussweiler and Strack showed that the anchoring effect works even with obviously implausible numbers. They asked participants, divided into two groups, how old Mahatma Gandhi was when he died. First, each group was asked an additional question that served as an anchor. The first group was asked, "Did he die before or after the age of 9?" and the second, "Did he die before or after the age of 140?" As a result, the first group estimated that Gandhi died at 50, and the second at 67 (in fact, he died at 78).

The anchor question with the number 9 caused the first group to give a significantly lower number than the second group, which started from a deliberately inflated number.

It is extremely important to recognize how much weight the initial information carries (whether it is plausible or not) before making a final decision. After all, the first thing we learn about something affects how we treat it in the future.

Selective perception

Do you think your point of view is based on hard facts? Maybe so, but the brain can "pull out" convenient arguments from the flow of information and ignore those that contradict our views. It seeks (and finds) confirmation of an existing point of view rather than trying to get to the bottom of things.

For example, a vegetarian reads only articles about the dangers of eating meat and ignores material about its benefits. Then, foaming at the mouth, they try to prove to their friends that there is no research showing any benefits of meat.

An opponent of GMO foods firmly believes that genetic engineering is the greatest of evils and overlooks research to the contrary. At the same time, a person with selective perception will tell you with a straight face that they have studied the issue, found the evidence, and there can be no doubt. And they may well have studied it: it is just that, as a psychological defense, the brain "fails to notice" inconvenient facts. The conclusions are completely biased.

The Illusion of Correlation

Does your friend think that a person’s character can be determined by his handwriting, his libido level by his driving style, and his mental abilities by how often a person scratches his left heel?

I am glad to report: he has the illusion of correlation - the belief in a non-existent cause-and-effect relationship between events, facts, actions and their results.

Many pseudosciences are based on this cognitive distortion: socionics, graphology, phrenology, Higir's theory that a person's name determines their character, as well as openly occult teachings such as palmistry.

Four Decision Making Mistakes

- Availability heuristic

- Illusory Truth Effect

- Semmelweis reflex

- Confirmation bias

Availability heuristic. This effect helps explain why there is so much endless debate on social media. It is an intuitive way of judging how true something is by how easily an example confirming it comes to mind.

For example, you read in an article that the average Muscovite takes 42.5 minutes to get to work. The moment you see this, you intuitively push back: where does this figure come from? All my colleagues take more than an hour to get there.

Or another example: it may seem to you that everyone around you leads a healthy lifestyle. You reach this conclusion only because your social media feed is full of such posts.

Illusory truth effect. Which of the following statements is not true?

- Lightning doesn't strike twice in the same place

- The Great Wall of China can be seen from space with the naked eye

- "Jihad" translates to "Holy War"

- Hair and nails grow even after death

In fact, none of this is true. And you can easily find confirmation of this.

This effect makes us trust what we have heard many times. Propaganda actively takes advantage of this, because it is so easy to make people believe simply by repeating the same thing.

Ask yourself often: “How do I know this?” and “Can the source be trusted?”

Semmelweis reflex. This is the tendency to hold on to beliefs even when facts contradicting them have already appeared. For example, a great many facts pointing to the existence of an ancient, highly developed civilization have accumulated from LAI expeditions to Egypt, Mexico, Bolivia, Japan, Turkey, and other countries, but official science continues to stubbornly turn a blind eye.

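History shows that a great many scientific discoveries went unrecognized for a long time after they were made. The reflex is named after Ignaz Semmelweis, whose finding in the 1840s that handwashing with chlorinated lime sharply reduced deaths from childbed fever was rejected by most of his colleagues.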

Established authorities tend to actively resist innovation. So, for science to recognize the existence of an ancient civilization, we will have to wait until these scientists retire and younger people with more flexible minds take their place.

Confirmation bias. We hold certain beliefs, and we like the facts that support them. Facts that refute our point of view, however, provoke rejection.

People are very sensitive to information that forces them to change their point of view.

Research suggests that when information contradicting our beliefs reaches the brain, some of the same brain regions are activated as during a physical threat.

In other words, when we are criticized, we feel threatened.