Article for the “bio/mol/text” competition: Cellular processes that ensure the exchange of information between neurons require a lot of energy. High energy consumption has contributed to the selection of the most efficient mechanisms for encoding and transmitting information during evolution. In this article, you will learn about the theoretical approach to the study of brain energy, its role in the study of pathologies, which neurons are more advanced, why synapses sometimes benefit from not “firing,” and how they select only the information the neuron needs.

Origin of the approach

Since the mid-twentieth century, it has been known that the brain consumes a significant part of the energy resources of the whole organism: a quarter of all glucose and ⅕ of all oxygen in great apes [1–5]. This inspired William Levy and Robert Baxter from the Massachusetts Institute of Technology (USA) to conduct a theoretical analysis of the energy efficiency of information encoding in biological neural networks (Fig. 1) [6]. The study rests on the following hypothesis: since the brain's energy consumption is high, it benefits from neurons that work as efficiently as possible, transmitting only useful information while expending a minimum of energy.

This assumption was borne out: using a simple neural network model, the authors reproduced the experimentally measured values of some parameters [6]. In particular, the optimal frequency of impulse generation they calculated ranges from 6 to 43 impulses/s, almost the same as for neurons at the base of the hippocampus. These neurons can be divided into two groups according to firing frequency: slow (~10 impulses/s) and fast (~40 impulses/s). Moreover, the first group significantly outnumbers the second [7]. A similar picture is observed in the cerebral cortex: there are several times more slow pyramidal neurons (~4–9 impulses/s) than fast inhibitory interneurons (>100 impulses/s) [8], [9]. Thus, apparently, the brain “prefers” to use fewer fast, energy-consuming neurons so that they do not use up all of its resources [6], [9–11].

Figure 1. Two neurons are shown. In one of them, the presynaptic protein synaptophysin is colored purple. The other neuron is completely stained with green fluorescent protein. Small light specks are synaptic contacts between the neurons [12]. The inset shows one such “speck” at higher magnification. Groups of neurons connected by synapses are called neural networks [13], [14]. For example, in the cerebral cortex, pyramidal neurons and interneurons form extensive networks. The coordinated “concert” work of these cells determines our higher cognitive and other abilities. Similar networks, made up of other types of neurons, are distributed throughout the brain; connected in a particular way, they organize the work of the entire organ.

website embryologie.uni-goettingen.de

What are interneurons?

Neurons of the central nervous system are divided into excitatory (forming excitatory synapses) and inhibitory (forming inhibitory synapses). The latter are largely represented by interneurons, or intermediate neurons. In the cerebral cortex and hippocampus, they are responsible for the formation of gamma rhythms in the brain [15], which ensure the coordinated, synchronous work of other neurons. This is extremely important for motor functions, perception of sensory information, and memory formation [9], [11].

Interneurons are distinguished by their ability to generate signals of significantly higher frequency than other neurons. They also contain more mitochondria, the main organelles of energy metabolism and the cell's ATP production factories. These mitochondria in turn contain large amounts of cytochrome c oxidase and cytochrome c, proteins that are key to metabolism. Thus, interneurons are extremely important and, at the same time, energy-consuming cells [8], [9], [11], [16].

The work of Levy and Baxter [6] develops the concept of “economy of impulses” by Horace Barlow from the University of California (USA), who, by the way, is a descendant of Charles Darwin [17]. According to it, during the development of the body, neurons tend to work only with the most useful information, filtering out “extra” impulses, unnecessary and redundant information. However, this concept does not provide satisfactory results, since it does not take into account the metabolic costs associated with neuronal activity [6]. Levy and Baxter's extended approach, which focuses on both factors, has been more fruitful [6], [18–20]. Both the energy expenditure of neurons and the need to encode only useful information are important factors guiding brain evolution [6], [21–24]. Therefore, in order to better understand how the brain works, it is worth considering both of these characteristics: how much useful information a neuron transmits and how much energy it spends.

Recently, this approach has found many confirmations [10], [22], [24–26]. It allowed us to take a new look at the structure of the brain at various levels of organization - from molecular biophysical [20], [26] to organ [23]. It helps to understand what the trade-offs are between the function of a neuron and its energy cost and to what extent they are expressed.

How does this approach work?

Suppose we have a model of a neuron that describes its electrophysiological properties: action potential (AP) and postsynaptic potentials (PSP) (more on these terms below). We want to understand whether it works efficiently and whether it wastes an unreasonable amount of energy. To do this, it is necessary to calculate the values of the model parameters (for example, the density of channels in the membrane, the speed of their opening and closing) at which: (a) the maximum ratio of useful information to energy consumption is achieved and, at the same time, (b) realistic characteristics of the transmitted signals are preserved [6], [19].

Search for the optimum

In fact, we are talking about an optimization problem: finding the maximum of a function and determining the parameters under which it is achieved. In our case, the function is the ratio of the amount of useful information to energy costs. The amount of useful information can be approximately calculated using Shannon's formula, widely used in information theory [6], [18], [19]. There are two methods for calculating energy costs, and both give plausible results [10], [27]. One of them, the “ion counting method”, is based on counting the number of Na+ ions that entered the neuron during a particular signaling event (AP or PSP, see sidebar “What is an action potential?”), with subsequent conversion to the number of molecules of adenosine triphosphate (ATP), the main energy “currency” of cells [10]. The second is based on describing the ionic currents through the membrane according to the laws of electrical circuits; it allows one to calculate the power of the equivalent electrical circuit of a neuron, which is then converted into ATP costs [17].
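The optimization described above can be illustrated with a minimal sketch. It assumes a deliberately simplified neuron that either fires or stays silent in each time bin, so that Shannon's formula reduces to the entropy of a binary event. The cost model (a fixed resting cost plus a cost per impulse) and the values of `rest_cost` and `spike_cost` are illustrative assumptions, not parameters taken from [6].

```python
import math


def binary_entropy(p):
    """Shannon entropy, in bits, of an event that occurs with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)


def bits_per_energy(p, rest_cost=1.0, spike_cost=10.0):
    """Information-to-energy ratio for firing probability p per time bin.

    Assumed cost model: a fixed resting cost plus a cost per impulse.
    """
    return binary_entropy(p) / (rest_cost + spike_cost * p)


def optimal_firing_probability(rest_cost=1.0, spike_cost=10.0, steps=1000):
    """Grid search for the firing probability that maximizes bits per energy."""
    grid = [i / steps for i in range(1, steps)]
    return max(grid, key=lambda p: bits_per_energy(p, rest_cost, spike_cost))


if __name__ == "__main__":
    for spike_cost in (0.0, 1.0, 10.0, 100.0):
        p_opt = optimal_firing_probability(spike_cost=spike_cost)
        print(f"spike_cost={spike_cost:6.1f}  optimal p={p_opt:.3f}")
```

In this toy model, free impulses give an optimum at a firing probability of one half, where the entropy is greatest. As impulses become more expensive, the optimum shifts toward rarer firing, which is the qualitative trade-off the approach relies on.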

These “optimal” parameter values must then be compared with those measured experimentally to determine how different they are. The overall picture of the differences indicates the degree of optimization of a given neuron as a whole: how closely the real, experimentally measured parameter values coincide with the calculated ones. The smaller the differences, the closer the neuron is to the optimum and the more energy-efficiently it works. In addition, a comparison of specific parameters shows in which particular respect the neuron is close to the “ideal”.

Next, two processes that underlie the encoding and transmission of information in the brain are considered in the context of the energy efficiency of neurons. These are the nerve impulse, or action potential, thanks to which information can be sent to the “addressee” over a certain distance (from micrometers to one and a half meters), and synaptic transmission, which underlies the actual transfer of a signal from one neuron to another.

Rearranging neural pathways

Each person is born with many neurons, but very few connections between them. These connections are built as we interact with the world around us and ultimately make us who we are. But sometimes you want to modify these established connections slightly. It would seem that this should be easy, because we developed them without much effort in our youth. However, forming new neural pathways in adulthood is unexpectedly difficult. Old connections are so effective that giving them up makes you feel as if your survival is at risk. Any new nerve chains are very fragile compared to the old ones. Once you understand how difficult it is to create new neural pathways in the human brain, you will be more inclined to be pleased with your persistence than to berate yourself for slow progress.

Action potential

An action potential (AP) is a signal that neurons send to each other. APs vary: they can be fast or slow, small or large [28]. They are often organized into long sequences (like letters in words) or into short, high-frequency “bursts” (Fig. 2).

Figure 2. Different types of neurons generate different signals. In the center is a longitudinal section of a mammalian brain. The boxes show different types of signals recorded by electrophysiological methods [15], [38]. a - Pyramidal neurons of the cerebral cortex (Cerebral cortex) can transmit both low-frequency signals (Regular firing) and short explosive, or burst, signals (Burst firing). b - Purkinje cells of the cerebellum (Cerebellum) are characterized only by burst activity at a very high frequency. c - Relay neurons of the thalamus (Thalamus) have two modes of activity: burst and tonic (Tonic firing). d - Neurons of the medial habenula (MHb, Medial habenula) of the epithalamus generate low-frequency tonic signals.

[14], figure adapted

The wide variety of signals is due to the huge number of combinations of different types of ion channels, synaptic contacts, as well as the morphology of neurons [28], [29]. Since neuronal signaling processes are based on ionic currents, it is expected that different APs require different energy inputs [20], [27], [30].

What is an action potential?

- Membrane and ions. The plasma membrane of a neuron maintains an uneven distribution of substances between the cell and the extracellular environment (Fig. 3b) [31–33]. Among these substances are small ions, of which K+ and Na+ are important for describing the AP. There are few Na+ ions inside the cell but many outside, so they constantly tend to enter the cell. K+ ions, on the contrary, are abundant inside the cell and tend to leave it. The ions cannot do this on their own, because the membrane is impermeable to them. For ions to pass through the membrane, special proteins must open: membrane ion channels.

- Ion channels. The variety of channels is enormous [14], [36], [38], [39]. Some open in response to a change in membrane potential, others upon binding of a ligand (a neurotransmitter in a synapse, for example), still others as a result of mechanical changes in the membrane, and so on. Opening a channel involves a change in its structure, as a result of which ions can pass through it. Some channels allow only a certain type of ion to pass through, while others are characterized by mixed conductivity. In the generation of the AP, a key role is played by channels that “sense” the membrane potential: voltage-gated ion channels. They open in response to changes in membrane potential. Among them, we are interested in voltage-gated sodium channels (Na channels), which allow only Na+ ions to pass through, and voltage-gated potassium channels (K channels), which allow only K+ ions to pass through.

- Ion current and AP. The basis of the AP is the ion current: the movement of ions through the ion channels of the membrane [38]. Since ions are charged, their current changes the net charge inside and outside the neuron, which immediately changes the membrane potential. APs are generated, as a rule, in the initial segment of the axon, the part adjacent to the neuron body [40], [14]. Many Na channels are concentrated here. If they open, a powerful current of Na+ ions flows into the axon and the membrane depolarizes: the membrane potential decreases in absolute value (Fig. 3c). Next, the potential must return to its original value; this is repolarization, and K+ ions are responsible for it. When the K channels open (shortly before the AP maximum), K+ ions begin to leave the cell and repolarize the membrane. Depolarization and repolarization are the two main phases of the AP. There are several more phases, which are not needed here and are therefore not considered. A detailed description of AP generation can be found in [14], [29], [38], [41]. A brief description of the AP is also available in articles on Biomolecule [15], [42].

- Initial axon segment and AP initiation. What causes Na channels to open at the axon initial segment? Again, a change in membrane potential “coming” along the dendrites of the neuron (Fig. 3a). These are postsynaptic potentials (PSPs) that arise as a result of synaptic transmission. This process is explained in more detail in the main text.

- AP conduction. Na channels located nearby will not remain indifferent to the AP in the initial segment of the axon. They too will open in response to this change in membrane potential, which will also cause an AP. The latter, in turn, will cause a similar “reaction” in the next section of the axon, further and further from the neuron body, and so on. In this way, the AP is conducted along the axon [14], [15], [38]. Eventually it reaches the presynaptic terminals (magenta arrows in Fig. 3a), where it can cause synaptic transmission.

- Energy consumption for the generation of APs is less than for the operation of synapses. How many molecules of adenosine triphosphate (ATP), the main energy “currency”, does an AP cost? According to one estimate, for pyramidal neurons of the rat cerebral cortex, the energy consumption for generating 4 APs per second is about ⅕ of the total energy consumption of the neuron. If we take into account other signaling processes, in particular synaptic transmission, the share will be ⅘. For the cerebellar cortex, which is responsible for motor functions, the situation is similar: the energy consumption for generating the output signal is 15% of the total, and about half goes to processing input information [25]. Thus, the AP is far from the most energy-intensive process. The operation of a synapse requires many times more energy [5], [19], [25]. However, this does not mean that AP generation shows no signs of energy efficiency.
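The “ion counting method” mentioned in the main text can be sketched as a rough calculation. The sketch assumes that the minimum charge needed for one AP is the membrane capacitance multiplied by the AP amplitude, and that the Na+/K+ pump spends one ATP molecule to export three Na+ ions. The membrane area, the AP amplitude of 100 mV, the specific capacitance of 1 µF/cm² and the `na_excess` factor are illustrative assumptions, not values from the cited studies.

```python
ELEMENTARY_CHARGE_C = 1.602176634e-19  # charge of one Na+ ion, in coulombs
NA_IONS_PER_ATP = 3  # the Na+/K+ pump exports 3 Na+ ions per ATP hydrolyzed


def atp_per_action_potential(area_um2, delta_v_mv=100.0,
                             capacitance_uf_per_cm2=1.0, na_excess=1.0):
    """Estimate the ATP molecules needed to pump out the Na+ from one AP.

    area_um2: membrane area in square micrometers (assumed).
    delta_v_mv: AP amplitude in millivolts (assumed).
    capacitance_uf_per_cm2: specific membrane capacitance (assumed).
    na_excess: how many times the real Na+ entry exceeds the minimum
               charge; 1.0 means no wasted Na+ entry.
    """
    area_cm2 = area_um2 * 1e-8
    capacitance_f = capacitance_uf_per_cm2 * 1e-6 * area_cm2
    min_charge_c = capacitance_f * delta_v_mv * 1e-3
    na_ions = na_excess * min_charge_c / ELEMENTARY_CHARGE_C
    return na_ions / NA_IONS_PER_ATP


if __name__ == "__main__":
    atp = atp_per_action_potential(area_um2=1000.0)
    print(f"ATP molecules per AP: {atp:.3g}")
```

Multiplying the result by the firing frequency gives an ATP cost per second, which is how such estimates are compared across signaling processes.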

Figure 3. Neuron, ion channels and action potential. a - Reconstruction of a chandelier cell in the rat cerebral cortex. The dendrites and body of the neuron are colored blue (blue spot in the center), the axon is colored red (in many types of neurons the axon is branched much more than the dendrites [8], [11], [35]). Green and crimson arrows indicate the direction of information flow: the dendrites and body of the neuron receive it, the axon sends it to other neurons. b - The membrane of a neuron, like that of any other cell, contains ion channels. Green circles are Na+ ions, blue circles are K+ ions. c - Change in membrane potential during the generation of an action potential (AP) by a Purkinje neuron. Green area: Na channels are open, Na+ ions enter the neuron, depolarization occurs. Blue area: K channels are open, K+ ions leave, repolarization occurs. The overlap of the green and blue regions corresponds to the period when Na+ entry and K+ exit occur simultaneously.

[34], [36], [37], figures adapted

An AP is a relatively large-amplitude, abrupt change in membrane potential.

Analysis of different types of neurons (Fig. 4) showed that invertebrate neurons are not very energy efficient, while some vertebrate neurons are almost perfect [20]. According to the results of this study, the most energy efficient were the interneurons of the hippocampus, which is involved in the formation of memory and emotions, as well as thalamocortical relay neurons, which carry the main flow of sensory information from the thalamus to the cerebral cortex.

Figure 4. Different neurons are efficient in different ways. The figure compares the energy consumption of different types of neurons. Energy consumption is calculated in models with both initial (real) parameter values (black columns) and optimal ones, at which the neuron performs its assigned function while spending a minimum of energy (gray columns). The most efficient of those presented turned out to be two types of vertebrate neurons: hippocampal interneurons (rat hippocampal interneuron, RHI) and thalamocortical neurons (mouse thalamocortical relay cell, MTCR), since for them the energy consumption in the original model is closest to that of the optimized one. In contrast, invertebrate neurons are less efficient. Legend: SA (squid axon) - squid giant axon; CA (crab axon) - crab axon; MFS (mouse fast spiking cortical interneuron) - fast-spiking cortical interneuron of the mouse; BK (honeybee mushroom body Kenyon cell) - Kenyon cell of the honeybee mushroom body.

[20], figure adapted

Why are they more efficient? Because their Na+ and K+ currents overlap very little. During the generation of an AP, there is always a period of time when these currents are present simultaneously (Fig. 3c). During this period, practically no net charge transfer occurs, and the change in membrane potential is minimal. But you still have to “pay” for these currents, despite their “uselessness” during this period. Therefore, its duration determines how much energy is wasted: the shorter it is, the more efficient the energy use, and the longer it is, the less efficient [20], [26], [30], [43]. In precisely the two above-mentioned types of neurons, thanks to fast ion channels, this period is very short, and APs are the most efficient [20].
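The effect of current overlap can be illustrated with a toy calculation. The sketch below is not the model used in [20]: it assumes that the Na+ and K+ currents are equal Gaussian pulses, that the K+ pulse is delayed relative to the Na+ pulse by `k_delay_ms`, and that the wasted Na+ charge is the part cancelled by simultaneous K+ current (the pointwise minimum of the two). All names and numbers are hypothetical.

```python
import math


def gaussian_current(t_ms, peak_time_ms, width_ms, amplitude=1.0):
    """Current magnitude at time t_ms for a Gaussian-shaped pulse."""
    return amplitude * math.exp(-0.5 * ((t_ms - peak_time_ms) / width_ms) ** 2)


def overlap_fraction(k_delay_ms, width_ms=0.2, dt_ms=0.001, duration_ms=5.0):
    """Fraction of the Na+ charge that flows while K+ current cancels it.

    0.0 means no overlap (no wasted Na+ entry); 1.0 means the two
    currents coincide completely.
    """
    na_charge = 0.0
    wasted_charge = 0.0
    steps = int(duration_ms / dt_ms)
    for i in range(steps):
        t = i * dt_ms
        i_na = gaussian_current(t, 1.0, width_ms)
        i_k = gaussian_current(t, 1.0 + k_delay_ms, width_ms)
        na_charge += i_na * dt_ms
        wasted_charge += min(i_na, i_k) * dt_ms
    return wasted_charge / na_charge


if __name__ == "__main__":
    for delay in (0.0, 0.2, 0.4, 0.8):
        print(f"K+ delay {delay:.1f} ms -> wasted fraction "
              f"{overlap_fraction(delay):.2f}")
```

In this sketch, the later the K+ current starts relative to the Na+ current, the smaller the wasted fraction, which mirrors the argument that a short overlap period makes an AP cheaper.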

By the way, interneurons are much more active than most other neurons in the brain. At the same time, they are extremely important for the coordinated, synchronous operation of the neurons with which they form small local networks [9], [16]. Probably, the high energy efficiency of APs in interneurons is an adaptation to their high activity and their role in coordinating the work of other neurons [20].

Synapse

The transmission of a signal from one neuron to another occurs in a special contact between neurons, in the synapse [12]. We will consider only chemical synapses (there are also electrical ones), since they are very common in the nervous system and are important for the regulation of cellular metabolism and nutrient delivery [5].

Most often, a chemical synapse is formed between the axon terminal of one neuron and the dendrite of another. Its operation resembles passing a relay baton, the role of which is played by a neurotransmitter, a chemical mediator of signal transmission [12], [42], [44–48].

At the presynaptic end of the axon, the AP causes the release of a neurotransmitter into the extracellular environment - to the receiving neuron. The latter is looking forward to just this: in the membrane of the dendrites, receptors - ion channels of a certain type - bind the neurotransmitter, open and allow different ions to pass through them. This leads to the generation of a small postsynaptic potential (PSP) on the dendrite membrane. It resembles AP, but is much smaller in amplitude and occurs due to the opening of other channels. Many of these small PSPs, each from its own synapse, “run” along the dendrite membrane to the neuron body (green arrows in Fig. 3a) and reach the initial segment of the axon, where they cause the opening of Na channels and “provoke” it to generate APs.

Such synapses are called excitatory: they contribute to the activation of the neuron and the generation of an AP. There are also inhibitory synapses. They, on the contrary, promote inhibition and prevent the generation of an AP. Often one neuron has both types of synapses. A certain ratio between inhibition and excitation is important for normal brain function and for the formation of the brain rhythms that accompany higher cognitive functions [49].

Oddly enough, the release of a neurotransmitter at the synapse may not occur at all: it is a probabilistic process [18], [19]. Neurons save energy in this way, since synaptic transmission already accounts for about half of all energy expenditure of neurons [25]. If synapses always fired, all the energy would go into keeping them functioning, and there would be no resources left for other processes. Moreover, it is the low probability (20–40%) of neurotransmitter release that corresponds to the highest energy efficiency of synapses: the ratio of the amount of useful information to the energy expended is at its maximum in this case [18], [19]. So it turns out that “failures” play an important role in the functioning of synapses and, accordingly, of the entire brain. And there is little reason to worry about signal transmission when individual synapses sometimes fail, since there are usually many synapses between neurons, and it is very likely that at least one of them will work.
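The last point is simple arithmetic and can be checked with a short sketch. It assumes that the synapses between two neurons release neurotransmitter independently of one another and with the same probability. The number of synapses used in the example (10) is a hypothetical value chosen for illustration.

```python
def p_at_least_one_release(p_release, n_synapses):
    """Probability that at least one of n independent synapses releases."""
    return 1.0 - (1.0 - p_release) ** n_synapses


def expected_releases(p_release, n_synapses):
    """Average number of synapses that release per incoming AP."""
    return p_release * n_synapses


if __name__ == "__main__":
    for n in (1, 5, 10):
        reliability = p_at_least_one_release(0.3, n)
        print(f"{n:2d} synapses: P(at least one release) = {reliability:.3f}")
```

With a release probability of 0.3 and ten synapses, at least one synapse releases in about 97% of cases, while on average only three of the ten spend energy on transmission.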

Another feature of synaptic transmission is the division of the general flow of information into individual components according to the modulation frequency of the incoming signal (roughly speaking, the frequency of incoming APs) [50]. This occurs due to the combination of different receptors on the postsynaptic membrane [38], [50]. Some receptors are activated very quickly: for example, AMPA receptors (AMPA comes from α-amino-3-hydroxy-5-methyl-4-isoxazolepropionic acid). If only such receptors are present on the postsynaptic neuron, it can clearly perceive a high-frequency signal (such as, for example, in Fig. 2c). The most striking example is the neurons of the auditory system, which are involved in determining the location of a sound source and in accurately recognizing short sounds such as clicks, which are widely represented in speech [12], [38], [51]. NMDA receptors (NMDA comes from N-methyl-D-aspartate) are slower. They allow neurons to select signals of lower frequency (Fig. 2d), as well as to perceive a high-frequency series of APs as something unified, the so-called integration of synaptic signals [14]. There are even slower metabotropic receptors, which, when binding a neurotransmitter, transmit a signal to a chain of intracellular “second messengers” to adjust a wide variety of cellular processes. For example, G protein-coupled receptors are widespread. Depending on the type, they, for example, regulate the number of channels in the membrane or directly modulate their operation [14].

Various combinations of fast AMPA, slower NMDA, and metabotropic receptors allow neurons to select and use the most useful information that is important for their functioning [50]. And “useless” information is eliminated; it is not “perceived” by the neuron. In this case, you don’t have to waste energy processing unnecessary information. This is another aspect of optimizing synaptic transmission between neurons.
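How receptor speed turns into frequency selectivity can be shown with a minimal sketch. It assumes that every incoming AP adds one unit to the postsynaptic response and that the response then decays exponentially with a time constant `tau_ms`. For a regular train with period T, the steady-state peak is 1 / (1 - exp(-T / tau)) and the trough just before the next AP is the peak times exp(-T / tau), so the relative dip between impulses is 1 - exp(-T / tau). The time constants below (2 ms for a fast, AMPA-like receptor and 100 ms for a slow, NMDA-like one) are illustrative assumptions.

```python
import math


def modulation_depth(rate_hz, tau_ms):
    """Relative dip of the response between successive APs (0 to 1).

    Near 1: every impulse is resolved separately.
    Near 0: the impulses merge into one smooth, integrated response.
    """
    period_ms = 1000.0 / rate_hz
    return 1.0 - math.exp(-period_ms / tau_ms)


if __name__ == "__main__":
    for name, tau_ms in (("fast (AMPA-like)", 2.0), ("slow (NMDA-like)", 100.0)):
        for rate_hz in (5.0, 100.0):
            depth = modulation_depth(rate_hz, tau_ms)
            print(f"{name:17s} {rate_hz:5.0f} Hz -> depth {depth:.2f}")
```

Under these assumptions, the fast receptor follows a 100 Hz train impulse by impulse, whereas the slow receptor smooths it into a nearly constant response and resolves individual impulses only at low frequencies.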

Goals of therapy

Currently, there are no effective treatments for this disease. Therefore, therapy is aimed at:

- slowing the progression of the disease and prolonging the period of illness during which the patient does not need constant outside care;

- reducing the severity of individual symptoms of the disease and maintaining a stable quality of life.

What else?

The energy efficiency of brain cells has also been studied in relation to their morphology [35], [52–54]. Studies show that the branching of dendrites and axons is not chaotic and also saves energy [52], [54]. For example, an axon branches so that the total length of the path that the AP travels is minimal. In this case, the energy consumption for conducting the AP along the axon is also minimal.

A reduction in neuron energy consumption is also achieved at a certain ratio of inhibitory and excitatory synapses [55]. This is directly related, for example, to ischemia of the brain (a pathological condition caused by impaired blood flow in the vessels). In this pathology, the most metabolically active neurons are most likely to fail first [9], [16]. In the cortex, they are represented by inhibitory interneurons that form inhibitory synapses on many pyramidal neurons [9], [16], [49]. As a result of the death of interneurons, the inhibition of pyramidal neurons decreases. As a consequence, the overall level of activity of the latter increases (excitatory synapses fire more often, APs are generated more often). This is immediately followed by an increase in their energy consumption, which under ischemic conditions can lead to the death of neurons.

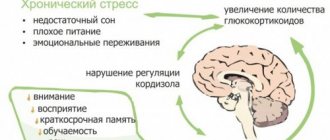

When studying pathologies, attention is paid to synaptic transmission as the most energy-consuming process [19]. For example, in Parkinson's [56], Huntington's [57], and Alzheimer's diseases [58–61], there is a disruption in the functioning or transport to the synapses of mitochondria, which play a major role in the synthesis of ATP [62], [63]. In the case of Parkinson's disease, this may be due to disruption and death of highly energy-consuming neurons of the substantia nigra, which is important for the regulation of motor functions and muscle tone. In Huntington's disease, the mutant protein huntingtin disrupts the delivery mechanisms of new mitochondria to synapses, which leads to “energy starvation” of the latter, increased vulnerability of neurons and excessive activation. All this can cause further disruption of neuronal function with subsequent atrophy of the striatum and cerebral cortex. In Alzheimer's disease, mitochondrial dysfunction (in parallel with a decrease in the number of synapses) occurs due to the deposition of amyloid plaques. The effect of the latter on mitochondria leads to oxidative stress, as well as apoptosis - cell death of neurons.

How many neurons are in the brain

Nerve cells make up about 10 percent of the brain; the remaining 90 percent are astrocytes and other glial cells, whose task is only to serve neurons.

Calculating the number of cells in the brain “manually” is as difficult as finding out the number of stars in the sky.

Nevertheless, scientists have come up with several ways to determine the number of neurons in a person:

- The number of nerve cells in a small part of the brain is calculated, and then the number is multiplied in proportion to the total volume. Researchers start from the postulate that neurons are evenly distributed in our brain.

- All brain cells are dissolved. The result is a liquid in which cell nuclei can be seen and counted. In this case, the service cells mentioned above are not taken into account.

As a result of the described experiments, it was established that the number of neurons in the human brain is about 85 billion. Previously, it was long believed that there were more nerve cells, about 100 billion.

Once again about everything

At the end of the twentieth century, an approach to studying the brain emerged that simultaneously considers two important characteristics: how much useful information a neuron (or neural network, or synapse) encodes and transmits, and how much energy it spends [6], [18], [19]. Their ratio is a kind of criterion for the energy efficiency of neurons, neural networks and synapses.

The use of this criterion in computational neuroscience has provided a significant increase in knowledge regarding the role of certain phenomena and processes [6], [18–20], [26], [30], [43], [55]. In particular, the low probability of neurotransmitter release at the synapse [18], [19], a certain balance between inhibition and excitation of a neuron [55], and the selection of only a certain type of incoming information due to a certain combination of receptors [50] - all this helps to save valuable energy resources.

Moreover, the very determination of the energy consumption of signaling processes (for example, generation, conduction of action potentials, synaptic transmission) makes it possible to find out which of them will suffer first in case of pathological disruption of nutrient delivery [10], [25], [56]. Since synapses require the most energy to operate, they are the first to fail in pathologies such as ischemia, Alzheimer’s and Huntington’s diseases [19], [25]. In a similar way, determining the energy consumption of different types of neurons helps to determine which of them will die before others in the event of pathology. For example, with the same ischemia, the interneurons of the cortex will fail first [9], [16]. Due to their intense metabolism, these same neurons are the most vulnerable cells during aging, Alzheimer’s disease, and schizophrenia [16].

In general, the approach to determining energetically efficient mechanisms of brain function is a powerful direction for the development of both fundamental neuroscience and its medical aspects [5], [14], [16], [20], [26], [55], [64].

What does neuroplasticity depend on?

- On the condition of the blood vessels. The more active the blood supply to the brain, the better the neurons work.

- On age. Neuroplasticity is higher in young people than in older people.

- On training. The more often we repeat a certain action, and the more intensively and methodically we strive to learn, the higher the likelihood that neurons will cooperate to perform that action.

- On when training starts. After an injury or stroke, it is better to start training as early as possible; otherwise the brain has time to adapt to what has happened (it stops taking the damaged functions into account), and activating them becomes more difficult over time.

- On the variety of practice. The more often we use neuroplasticity, the higher it is. When we learn a specific skill, the learning ability of our brain in general increases.